Applying Simple ML to Simplify Behavioral Surveys for Real-World Decision Science

Self-Service Notebook on GitHub -> https://github.com/glombardo/Research/blob/main/Understanding_Self_Control_Failures_in_Everyday_Decisions.ipynb

Live Survey Data (feel free to download and run with the notebook!) -> https://docs.google.com/spreadsheets/d/1SmHMxUkMJ9m1FaNvVjtMSxM-AGkk-ftRZkovc4zZFac/

🧩 The Problem: Behavioral Biases Don’t Live in Silos

Behavioral science has identified many cognitive biases that explain why people make poor long-term decisions (like overspending, procrastinating, or abandoning health goals). But in real life, these biases don’t operate in isolation. A person struggling with savings may not only be impulsive (present-biased) but also overly confident about future behavior (over-optimistic) or anchored to past losses (reference-dependent).

That complexity presents a challenge:

❓ How do we efficiently measure overlapping behavioral traits without overwhelming respondents?

🧪 The Hypothesis

Even though present bias, projection bias, reference-dependent utility, and over-optimism are well-defined in theory, they often blur together in practice. This project tests whether a compact survey can still capture the underlying structure of these traits, and whether we can reduce question count without sacrificing insight.

📝 What Was Done

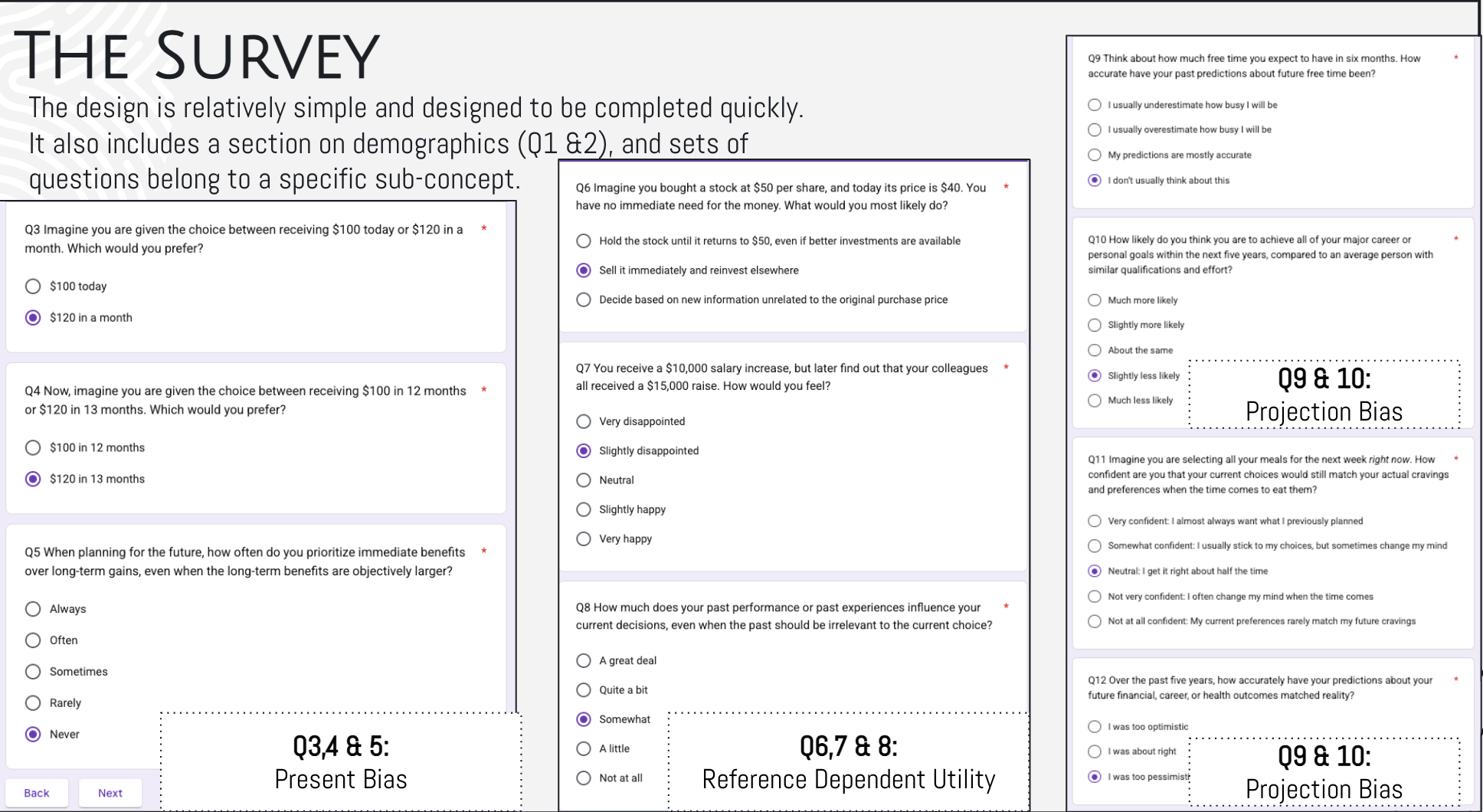

Guido designed a lean behavioral survey focused on four cognitive biases:

Present Bias – Favoring immediate rewards over future ones

Reference-Dependent Utility – Letting past outcomes shape current choices

Projection Bias – Assuming your future preferences will match today's

Over-Optimism – Believing your outcomes will beat the odds

Each bias was initially measured with 2–3 short questions, plus demographics.

🔍 What Tools Were Used

The project applied Principal Component Analysis (PCA), a statistical method that finds the directions along which responses vary together. Here it serves as a check on whether the survey items group naturally into the behavioral dimensions they were meant to measure.

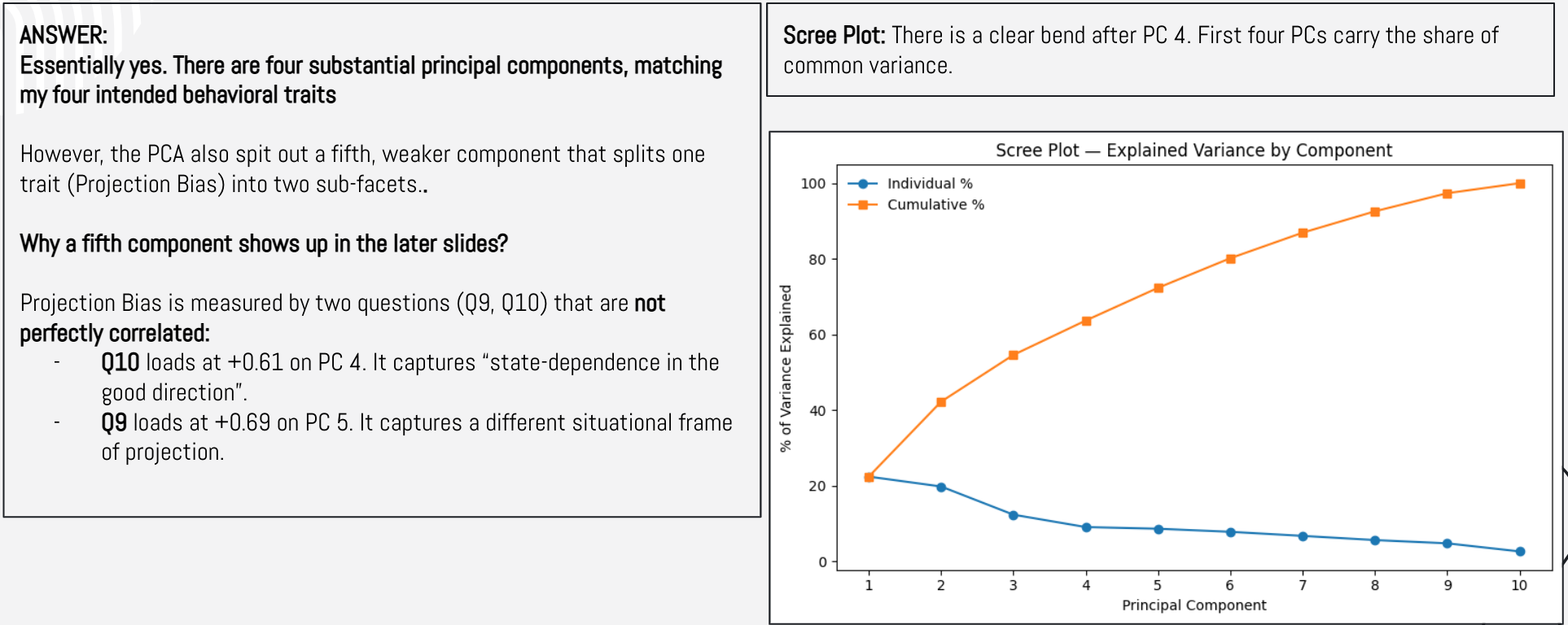

Are there as many principal components (PCs) as the behavioral traits you proposed to measure?

Do the questions map cleanly onto the PCs?
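Both questions can be explored with a few lines of NumPy. The sketch below is not the notebook's code: it runs on synthetic responses, and the item counts, noise levels, and the eigenvalue-greater-than-1 (Kaiser) cutoff are all assumptions chosen for illustration. It counts the components worth keeping and reports which component each question loads on most strongly.

```python
import numpy as np

def simulate_survey(n_respondents=2000, seed=0):
    """Synthetic stand-in for survey data: each item = latent trait + noise."""
    rng = np.random.default_rng(seed)
    # (bias name, number of items, noise SD); every value here is made up
    design = [("present_bias", 3, 0.33), ("reference_dependence", 3, 0.82),
              ("projection_bias", 2, 0.50), ("over_optimism", 2, 1.22)]
    columns, labels = [], []
    for name, n_items, noise_sd in design:
        trait = rng.normal(size=n_respondents)
        for i in range(n_items):
            columns.append(trait + noise_sd * rng.normal(size=n_respondents))
            labels.append(f"{name}_q{i + 1}")
    return np.column_stack(columns), labels

def pca_from_correlation(responses):
    """Eigenvalues (descending) and item loadings of the correlation matrix."""
    corr = np.corrcoef(responses, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)       # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Scale each eigenvector by sqrt(eigenvalue) to get item-component correlations
    loadings = eigvecs * np.sqrt(np.clip(eigvals, 0.0, None))
    return eigvals, loadings

responses, labels = simulate_survey()
eigvals, loadings = pca_from_correlation(responses)

# Question 1: how many components? (Kaiser criterion, an assumed rule of thumb)
n_components = int(np.sum(eigvals > 1.0))
print("components kept:", n_components)
print("share of variance:", np.round(eigvals[:n_components] / eigvals.sum(), 2))

# Question 2: which component does each question load on most strongly?
dominant = np.argmax(np.abs(loadings[:, :n_components]), axis=1)
for label, pc in zip(labels, dominant):
    print(f"{label:28s} -> PC{pc + 1}")
```

On real responses, replace `simulate_survey()` with the downloaded spreadsheet columns. A scree plot or parallel analysis are common alternatives to the Kaiser cutoff.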

📊 What the Data Showed

The analysis revealed four main dimensions that largely align with the original behavioral constructs.

Interestingly, Projection Bias split into two subtly different sub-patterns, suggesting real-world variation in how people mispredict future behavior.

A few questions co-loaded across dimensions, which is consistent with the hypothesis that these biases interact and overlap.
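One way to spot co-loading questions is to flag any item whose absolute loading clears a cutoff on two or more components. The sketch below uses a toy loading matrix with invented numbers (not the study's results), and the 0.4 cutoff and the `flag_cross_loadings` helper are assumptions for illustration.

```python
import numpy as np

def flag_cross_loadings(loadings, labels, threshold=0.4):
    """Return {item: [component indices]} for items loading on 2+ components."""
    strong = np.abs(np.asarray(loadings)) >= threshold
    return {label: np.flatnonzero(row).tolist()
            for label, row in zip(labels, strong) if row.sum() >= 2}

# Toy loadings (rows = items, columns = components); values are invented
toy_labels = ["item_a", "item_b", "item_c", "item_d"]
toy_loadings = [
    [0.82,  0.10, 0.05],   # loads on component 0 only
    [0.55, -0.48, 0.02],   # loads on components 0 and 1
    [0.07,  0.79, 0.12],   # loads on component 1 only
    [0.12,  0.41, 0.63],   # loads on components 1 and 2
]

cross_loaded = flag_cross_loadings(toy_loadings, toy_labels)
print(cross_loaded)  # {'item_b': [0, 1], 'item_d': [1, 2]}
```

Flagged items are candidates for rewording or removal, although a co-loading question may also reflect a genuine overlap between biases.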

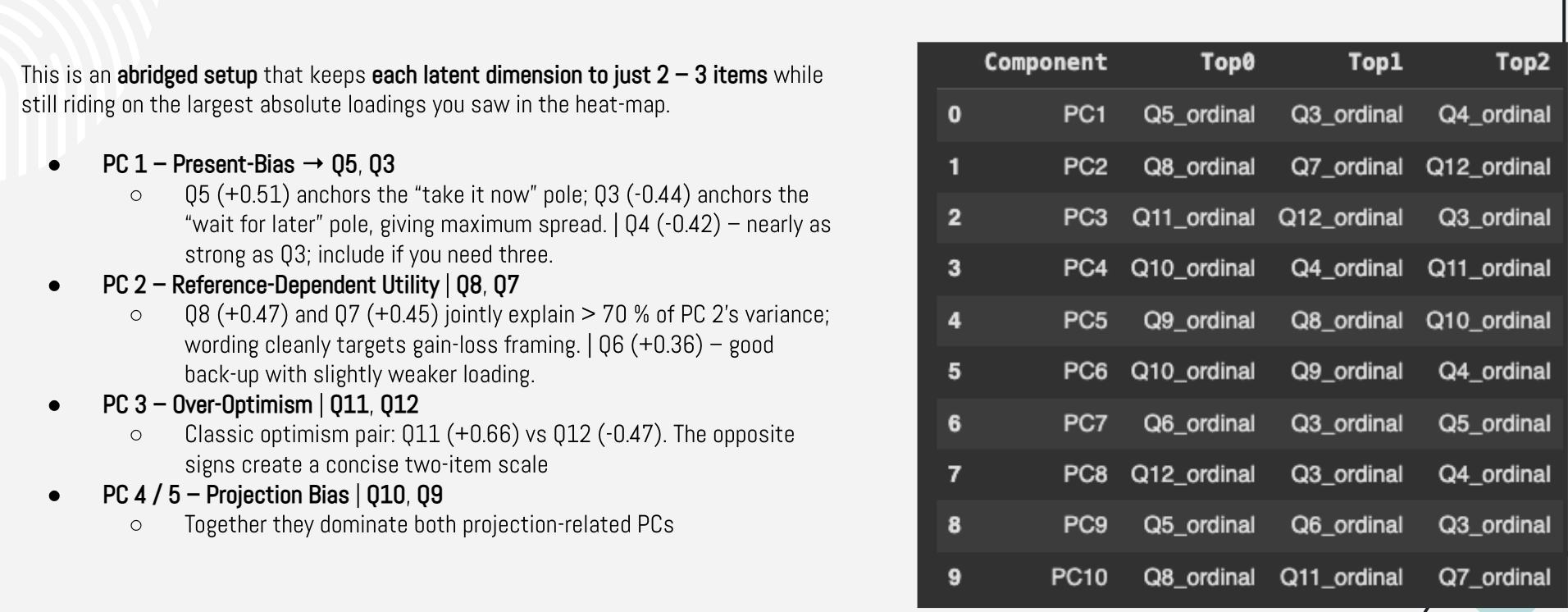

Can we generate an abridged survey that is more efficient while capturing the needed variability?
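A possible approach to abridging, sketched under stated assumptions: rank each question by its communality (the share of its variance captured by the retained components), keep the best-represented questions within each intended bias, and check how closely short-form scores track full-form scores. The data are synthetic, the noise levels and the choice of two items per bias are made up, and this is not necessarily the selection rule used in the notebook.

```python
import numpy as np

rng = np.random.default_rng(1)
n_respondents = 2000
biases = ["present_bias", "reference_dependence", "projection_bias", "over_optimism"]
noise_sds = [0.4, 0.5, 1.5]   # made-up: the third item of each bias is deliberately weak

# Synthetic stand-in for the survey: each item = latent trait + noise
columns, item_bias = [], []
for bias in biases:
    trait = rng.normal(size=n_respondents)
    for sd in noise_sds:
        columns.append(trait + sd * rng.normal(size=n_respondents))
        item_bias.append(bias)
X = np.column_stack(columns)
item_bias = np.array(item_bias)

# PCA on the correlation matrix; keep components with eigenvalue > 1 (assumed cutoff)
eigvals, eigvecs = np.linalg.eigh(np.corrcoef(X, rowvar=False))
keep = eigvals > 1.0
loadings = eigvecs[:, keep] * np.sqrt(eigvals[keep])
communality = (loadings ** 2).sum(axis=1)

# Keep the two best-represented items within each intended bias
items_per_bias = 2
selected = []
for bias in biases:
    idx = np.flatnonzero(item_bias == bias)
    best = idx[np.argsort(communality[idx])[::-1][:items_per_bias]]
    selected.extend(sorted(best.tolist()))

# Compare short-form and full-form mean scores for each bias
tracking = {}
for bias in biases:
    idx = np.flatnonzero(item_bias == bias)
    short_idx = [i for i in selected if i in set(idx.tolist())]
    full_score = X[:, idx].mean(axis=1)
    short_score = X[:, short_idx].mean(axis=1)
    tracking[bias] = float(np.corrcoef(full_score, short_score)[0, 1])

print("retained item columns:", selected)
print({bias: round(r, 3) for bias, r in tracking.items()})
```

Communality is used here because it does not depend on how the retained components are rotated, which matters when several components explain similar amounts of variance.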

✂️ Why Simplification Matters

In practice, long behavioral surveys are rarely feasible. Whether in apps, clinical settings, or consumer research, time is limited. This project shows that:

✅ You can capture complex cognitive traits with fewer questions

✅ Interacting biases can be statistically disentangled with the right tools

✅ Survey length can be reduced without losing key signal

By trimming the original battery down to 2–3 strong items per bias, the revised version becomes more usable for:

Field experiments

User onboarding diagnostics

Behaviorally informed product design

Scalable academic studies